Summary

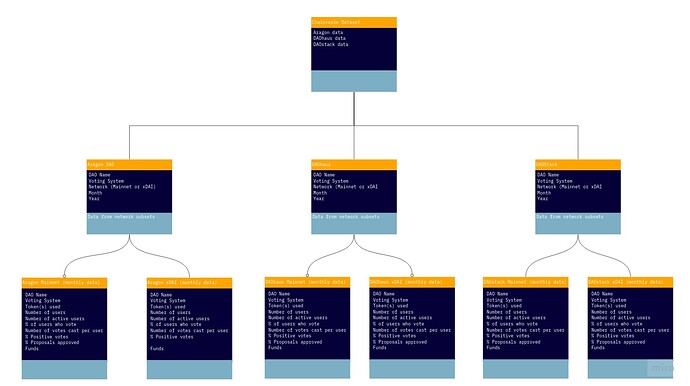

As more DAOs launch, there’s value in extracting events, transactions, and interactions from the Ethereum blockchain to analyze governance, community, and protocol evolution. Tools like Dune Analytics and The Graph provide interfaces for querying indexed blockchain data and sharing code and results.

However, DAO transaction data is not yet standardized, and it is not easy to construct meaningful datasets from it. Without familiarity with a specific smart contract, it can be challenging to write a query that returns accurate, trusted results. Knowing which source data to target for metric calculation also requires an understanding of the DAO project: governance framework, launch process, tokens/funding source, etc.
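To illustrate why contract familiarity matters, here is a minimal sketch of decoding one raw event log by hand. It assumes a standard ERC-20 `Transfer(address,address,uint256)` event, whose topic hash is well known; the `decode_transfer` helper and the sample log are hypothetical and are not taken from any DXdao contract. Events from contract-specific logic (proposals, votes, reputation) need that contract's ABI to be decoded in the same way.

```python
# Well-known topic hash for the ERC-20 event Transfer(address,address,uint256).
TRANSFER_TOPIC = (
    "0xddf252ad1be2c89b69c2b068fc378daa952ba7f163c4a11628f55a4df523b3ef"
)


def decode_transfer(log):
    """Decode a raw ERC-20 Transfer log into sender, recipient, and raw amount.

    Returns None when the log is not a standard ERC-20 Transfer event.
    """
    topics = log["topics"]
    if len(topics) != 3 or topics[0].lower() != TRANSFER_TOPIC:
        return None
    return {
        # Indexed addresses are left-padded to 32 bytes; keep the last 20 bytes.
        "from": "0x" + topics[1][-40:],
        "to": "0x" + topics[2][-40:],
        # The non-indexed amount is a 32-byte unsigned integer in the data field.
        # It is in the token's smallest unit (not adjusted for decimals).
        "value": int(log["data"], 16),
    }


# Hypothetical log with made-up addresses, shaped like an eth_getLogs result.
sample_log = {
    "topics": [
        TRANSFER_TOPIC,
        "0x" + "00" * 12 + "11" * 20,
        "0x" + "00" * 12 + "22" * 20,
    ],
    "data": "0x" + format(5 * 10**18, "064x"),
}

decoded = decode_transfer(sample_log)
```

Indexed tools such as Dune Analytics and The Graph typically handle this decoding step already, but choosing which decoded events to count toward a metric still depends on knowing the contract.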

With this proposal I will develop a data pipeline of DXdao launch metrics from the manifesto, document the process, and contribute the code for Diamond DAO. This can then be used as a template for other DAO projects we wish to include in our datasets.

Proposal

With this funding proposal I will deliver our MVP capability of:

- DAO dataset curation

- Calculating DAO meta metrics

- Developing useful tutorial content

I will use Dune Analytics, The Graph, Etherscan, and any other tools to accelerate development.

Progress, questions, and discussion will be tracked in this Discourse thread: DAO Data Engineering

I may request help/input from other Diamond DAO members with web3/data mining experience as I scale the learning curve.

Deliverables include:

- Reproducible query code that returns DXdao launch metrics (scope in thread)

- “How to” article/blog post and documentation

- Any third-party dashboards, tool configurations, etc. developed to complete the work

Estimated completion time: October 2021

Applicant: 0x97b9958faceC9ACB7ADb2Bb72a70172CB5a0Ea7C

Shares: 50

Payment requested: 1000